I tried to install Hadoop through Cloudera, but had no luck: the installation always failed with a server crash, and afterwards the service couldn’t start again, even after rebooting. So I decided to install Hadoop manually.

1. Install Hadoop on the AWS server

ubuntu@ip-xxx-xxx-xxx-xxx:~$ wget http://apache.mirror.gtcomm.net/hadoop/common/current/hadoop-2.6.0.tar.gz

ubuntu@ip-xxx-xxx-xxx-xxx:~$ tar -xvzf hadoop-2.6.0.tar.gz

ubuntu@ip-xxx-xxx-xxx-xxx:~$ ln -s hadoop-2.6.0 hadoop
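
The mirror URL above points into a ‘current’ directory, which mirrors move to each new release, so it may no longer serve 2.6.0. Old releases stay on the Apache archive. The sketch below assumes the archive layout dist/hadoop/common/hadoop-VERSION/ and wraps the same three steps in a function. The name fetch_hadoop is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: download a Hadoop release from the Apache archive,
# unpack it in the current directory and (re)point the "hadoop" symlink at it.
# Assumes the archive layout dist/hadoop/common/hadoop-<version>/.
fetch_hadoop() {
  local version="${1:?usage: fetch_hadoop VERSION}"
  local name="hadoop-${version}"
  local url="https://archive.apache.org/dist/hadoop/common/${name}/${name}.tar.gz"
  wget -q "$url" -O "${name}.tar.gz" || return 1
  tar -xzf "${name}.tar.gz" || return 1
  # -n: replace an existing "hadoop" symlink instead of creating a link inside it
  ln -sfn "$name" hadoop
}
```

For example, run ‘cd ~ && fetch_hadoop 2.6.0’ to get the same layout as the commands above.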

2. Install Java

ubuntu@ip-xxx-xxx-xxx-xxx:~$ sudo apt-get update

ubuntu@ip-xxx-xxx-xxx-xxx:~$ sudo apt-get install default-jdk

ubuntu@ip-xxx-xxx-xxx-xxx:~$ java -version

3. Configure environment

ubuntu@ip-xxx-xxx-xxx-xxx:~$ sudo nano /etc/environment

...

JAVA_HOME="/usr/lib/jvm/java-7-openjdk-amd64"

PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/game$

ubuntu@ip-xxx-xxx-xxx-xxx:~$ nano ~/.bashrc

...

# JAVA

export JAVA_HOME="/usr/lib/jvm/java-7-openjdk-amd64"

export PATH=$PATH:$JAVA_HOME/bin

# HADOOP

export HADOOP_PREFIX=/home/ubuntu/hadoop

export HADOOP_HOME=/home/ubuntu/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=${HADOOP_HOME}

export HADOOP_COMMON_HOME=${HADOOP_HOME}

export HADOOP_HDFS_HOME=${HADOOP_HOME}

export HADOOP_CONF=${HADOOP_HOME}/etc/hadoop

export YARN_HOME=${HADOOP_HOME}

export YARN_CONF=${HADOOP_HOME}/etc/hadoop

# HADOOP Native Path

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

ubuntu@ip-xxx-xxx-xxx-xxx:~$ source ~/.bashrc

ubuntu@ip-xxx-xxx-xxx-xxx:~$ echo $HADOOP_HOME

/home/ubuntu/hadoop

ubuntu@ip-xxx-xxx-xxx-xxx:~$
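
Echoing one variable only checks one value. The sketch below checks every key variable used later in this post. The function name check_hadoop_env is hypothetical. The check only tests that each variable is set and names an existing directory.

```shell
#!/usr/bin/env bash
# Hypothetical sanity check: report any of the variables exported in ~/.bashrc
# that are empty or do not point to an existing directory.
check_hadoop_env() {
  local missing=0 var
  for var in JAVA_HOME HADOOP_HOME HADOOP_CONF; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var
    if [ -z "${!var}" ] || [ ! -d "${!var}" ]; then
      echo "not set or not a directory: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```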

If you don’t know where Java is installed, you can find it with this:

ubuntu@ip-xxx-xxx-xxx-xxx:~$ sudo update-alternatives --config java

or this (note that it returns the /usr/bin/java symlink rather than the JDK directory itself):

ubuntu@ip-xxx-xxx-xxx-xxx:~$ which java
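
‘which java’ usually prints the /usr/bin/java symlink managed by update-alternatives. To turn that into a JAVA_HOME value, resolve the symlink and strip the trailing bin/java, plus jre if present. This is a minimal sketch assuming the usual OpenJDK layout (…/jre/bin/java or …/bin/java). The function name java_home_from_binary is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive a JAVA_HOME candidate from a java binary
# (defaults to the one found on PATH).
java_home_from_binary() {
  local bin="${1:-$(command -v java)}"
  local real
  # Follow every symlink (e.g. /usr/bin/java -> /etc/alternatives/java -> ...)
  real="$(readlink -f "$bin")" || return 1
  real="${real%/bin/java}"   # drop the trailing /bin/java
  real="${real%/jre}"        # OpenJDK 7 on Ubuntu resolves to .../jre/bin/java
  printf '%s\n' "$real"
}
```

On the setup described here, this should print /usr/lib/jvm/java-7-openjdk-amd64, the value used for JAVA_HOME above.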

Then repeat steps 1 to 3 on the other three machines (the secondary NameNode and two slaves).

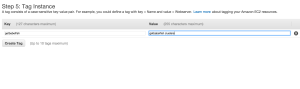

Alternatively, make an image (AMI) of this instance and launch the other machines from it.

I only found out about AMIs while struggling with the Hadoop installation.

They are quite convenient for setting up multiple machines.

4. Configure SSH so the master can connect to the slaves without a password

ubuntu@ip-xxx-xxx-xxx-xxx:~$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/ubuntu/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/ubuntu/.ssh/id_dsa.

Your public key has been saved in /home/ubuntu/.ssh/id_dsa.pub.

The key fingerprint is:

f3:77:56:58:a8:bb:08:59:67:15:2c:0e:1d:d0:40:a3 ubuntu@ip-xxx-xxx-xxx-xxx

ubuntu@ip-xxx-xxx-xxx-xxx:~$ chmod 600 $HOME/.ssh/id_dsa*

After generating the key pair, append the public key to ‘authorized_keys’ and check that the key works:

ubuntu@ip-xxx-xxx-xxx-xxx:~$ cat /home/ubuntu/.ssh/id_dsa.pub >> /home/ubuntu/.ssh/authorized_keys

ubuntu@ip-xxx-xxx-xxx-xxx:~$ ssh localhost

Welcome to Ubuntu 14.04.1 LTS (GNU/Linux 3.13.0-36-generic x86_64)

* Documentation: https://help.ubuntu.com/

System information as of Thu Dec 11 21:33:54 UTC 2014

System load: 0.0 Processes: 97

Usage of /: 2.6% of 62.86GB Users logged in: 0

Memory usage: 5% IP address for eth0: 172.31.22.234

Swap usage: 0%

Graph this data and manage this system at:

https://landscape.canonical.com/

Get cloud support with Ubuntu Advantage Cloud Guest:

http://www.ubuntu.com/business/services/cloud

Last login: Thu Dec 11 21:33:54 2014 from aftr-37-201-193-43.unity-media.net

ubuntu@ip-xxx-xxx-xxx-xxx:~$
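
The same setup can be done without prompts. One caveat: OpenSSH 7.0 and later reject DSA keys by default, so on newer Ubuntu releases an RSA key is the safer choice. The sketch below assumes that and generates RSA instead of the DSA key used above. The function name setup_self_ssh is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a passphrase-less RSA key (if absent) in the given
# .ssh directory and authorize it for logins to this same account.
setup_self_ssh() {
  local dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir" && chmod 700 "$dir" || return 1
  if [ ! -f "$dir/id_rsa" ]; then
    # -q: quiet, -N "": empty passphrase, -f: output file (so no prompts)
    ssh-keygen -q -t rsa -N "" -f "$dir/id_rsa" || return 1
  fi
  touch "$dir/authorized_keys"
  # Append the public key only if that exact line is not already present
  grep -qxF "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys" \
    || cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  # sshd's StrictModes rejects key files writable by group or others
  chmod 600 "$dir/id_rsa" "$dir/authorized_keys"
}
```

Running it twice is harmless: the key is generated once and authorized once.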

For the master to log in to the other machines, copy ‘authorized_keys’ to each of them (name.pem is the EC2 key pair file, which must be present on the master):

ubuntu@ip-xxx-xxx-xxx-xxx:~$ scp -i ~/.ssh/name.pem ~/.ssh/authorized_keys ubuntu@SECONDARY_NAME_NODE_ADDRESS:~/.ssh/

ubuntu@ip-xxx-xxx-xxx-xxx:~$ scp -i ~/.ssh/name.pem ~/.ssh/authorized_keys ubuntu@SLAVE1_ADDRESS:~/.ssh/

ubuntu@ip-xxx-xxx-xxx-xxx:~$ scp -i ~/.ssh/name.pem ~/.ssh/authorized_keys ubuntu@SLAVE2_ADDRESS:~/.ssh/
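
Copying ‘authorized_keys’ with scp replaces the file on the target. This works only if the master's copy already contains every key the target needs, for example when all instances were launched with the same EC2 key pair. A hedged alternative appends just the master's public key on each host. The function name push_pubkey is hypothetical, and name.pem plus the host names remain the placeholders used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a public key to ~/.ssh/authorized_keys on each
# remote host (logging in with the EC2 .pem key) instead of overwriting the file.
push_pubkey() {
  local pem="$1" pubkey="$2" host
  shift 2
  for host in "$@"; do
    # The remote shell reads the key from stdin and appends it
    ssh -i "$pem" "$host" \
      'mkdir -p ~/.ssh && chmod 700 ~/.ssh && cat >> ~/.ssh/authorized_keys && chmod 600 ~/.ssh/authorized_keys' \
      < "$pubkey" || return 1
  done
}
```

Usage: push_pubkey ~/.ssh/name.pem ~/.ssh/id_dsa.pub ubuntu@SECONDARY_NAME_NODE_ADDRESS ubuntu@SLAVE1_ADDRESS ubuntu@SLAVE2_ADDRESS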

References:

https://macnugget.org/projects/publickeys/

http://haruair.com/blog/1827

5. Hadoop Cluster Setup – hadoop-env.sh

ubuntu@ip-xxx-xxx-xxx-xxx:~$ nano $HADOOP_CONF/hadoop-env.sh

#replace this

export JAVA_HOME=${JAVA_HOME}

#to this

export JAVA_HOME="/usr/lib/jvm/java-7-openjdk-amd64"
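
Hard-coding the path here is useful because start-dfs.sh and start-yarn.sh launch the daemons through non-interactive SSH sessions. Ubuntu's default ~/.bashrc returns early for non-interactive shells, so the exports from step 3 may not be applied there. The same edit can be made without an editor, which helps when repeating it on every machine. This sketch assumes GNU sed (as on Ubuntu). The function name set_hadoop_java_home is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the "export JAVA_HOME=..." line of hadoop-env.sh
# in place with a fixed path.
set_hadoop_java_home() {
  local conf_dir="$1" java_home="$2"
  # GNU sed -i edits in place; "|" is the delimiter because the path contains "/"
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=\"${java_home}\"|" "${conf_dir}/hadoop-env.sh"
}
```

Usage: set_hadoop_java_home "$HADOOP_CONF" /usr/lib/jvm/java-7-openjdk-amd64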

6. Hadoop Cluster Setup – core-site.xml

ubuntu@ip-xxx-xxx-xxx-xxx:~$ mkdir hdfstmp

ubuntu@ip-xxx-xxx-xxx-xxx:~$ nano $HADOOP_CONF/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://MASTER_SERVER_ADDRESS:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/ubuntu/hdfstmp</value>

</property>

</configuration>

MASTER_SERVER_ADDRESS can be the master's internal host name, such as ‘ip-172-31-24-134.us-west-1.compute.internal’, or even ‘localhost’ or ‘127.0.0.1’ on a single machine. For a cluster, however, you should use the master's public or private address, because these configuration files will be copied to the slave machines, where ‘localhost’ would point at the slave itself.
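
Because the same *-site.xml files end up on every machine, generating them from a script avoids hand-editing mistakes. The sketch below prints a Hadoop configuration document from NAME=VALUE pairs. It assumes the values contain no characters that need XML escaping (&, <, >). The function name hadoop_site_xml is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Hadoop *-site.xml document from NAME=VALUE pairs.
# Values are NOT XML-escaped, so they must not contain &, < or >.
hadoop_site_xml() {
  local pair
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  for pair in "$@"; do
    # Split on the first "=" only, so values like hdfs://host:9000 stay intact
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "${pair%%=*}" "${pair#*=}"
  done
  echo '</configuration>'
}
```

For example, the core-site.xml above could be produced with: hadoop_site_xml fs.defaultFS=hdfs://MASTER_SERVER_ADDRESS:9000 hadoop.tmp.dir=/home/ubuntu/hdfstmp > $HADOOP_CONF/core-site.xml (fs.defaultFS is the Hadoop 2 name for fs.default.name).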

7. Hadoop Cluster Setup – hdfs-site.xml

ubuntu@ip-xxx-xxx-xxx-xxx:~$ nano $HADOOP_CONF/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/ubuntu/hdfstmp/dfs/name</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/ubuntu/hdfstmp/dfs/data</value>

<final>true</final>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>

8. Hadoop Cluster Setup – yarn-site.xml

ubuntu@ip-xxx-xxx-xxx-xxx:~$ nano $HADOOP_CONF/yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>MASTER_SERVER_ADDRESS:8025</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>MASTER_SERVER_ADDRESS:8030</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>MASTER_SERVER_ADDRESS:8040</value>

</property>

</configuration>
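
The MASTER_SERVER_ADDRESS placeholder now appears in both core-site.xml and yarn-site.xml. If the files were written with the literal placeholder, one substitution can fill in the real address, for example the master's private DNS name shown in the EC2 console. This sketch assumes GNU sed. The function name fill_master_address is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace every MASTER_SERVER_ADDRESS placeholder in
# core-site.xml and yarn-site.xml with the master's real address.
fill_master_address() {
  local conf_dir="$1" addr="$2"
  sed -i "s|MASTER_SERVER_ADDRESS|${addr}|g" \
    "${conf_dir}/core-site.xml" "${conf_dir}/yarn-site.xml"
}
```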

9. Hadoop Cluster Setup – mapred-site.xml

ubuntu@ip-xxx-xxx-xxx-xxx:~$ cp $HADOOP_CONF/mapred-site.xml.template $HADOOP_CONF/mapred-site.xml

ubuntu@ip-xxx-xxx-xxx-xxx:~$ nano $HADOOP_CONF/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

10. Copy the configuration files to the other machines

ubuntu@ip-xxx-xxx-xxx-xxx:~$ scp $HADOOP_CONF/hadoop-env.sh $HADOOP_CONF/core-site.xml $HADOOP_CONF/yarn-site.xml $HADOOP_CONF/hdfs-site.xml $HADOOP_CONF/mapred-site.xml ubuntu@SECONDARY_NAME_NODE_ADDRESS:/home/ubuntu/hadoop/etc/hadoop/

ubuntu@ip-xxx-xxx-xxx-xxx:~$ scp $HADOOP_CONF/hadoop-env.sh $HADOOP_CONF/core-site.xml $HADOOP_CONF/yarn-site.xml $HADOOP_CONF/hdfs-site.xml $HADOOP_CONF/mapred-site.xml ubuntu@SLAVE1_ADDRESS:/home/ubuntu/hadoop/etc/hadoop/

ubuntu@ip-xxx-xxx-xxx-xxx:~$ scp $HADOOP_CONF/hadoop-env.sh $HADOOP_CONF/core-site.xml $HADOOP_CONF/yarn-site.xml $HADOOP_CONF/hdfs-site.xml $HADOOP_CONF/mapred-site.xml ubuntu@SLAVE2_ADDRESS:/home/ubuntu/hadoop/etc/hadoop/
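
The three scp commands differ only in the target host, so a loop avoids copy-paste slips. This sketch keeps the remote path /home/ubuntu/hadoop/etc/hadoop/ used above. The function name push_hadoop_conf is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the five edited configuration files to every host
# given on the command line (the same scp call as above, in a loop).
push_hadoop_conf() {
  local conf_dir="$1" host
  shift
  local files=(hadoop-env.sh core-site.xml hdfs-site.xml yarn-site.xml mapred-site.xml)
  for host in "$@"; do
    # "${files[@]/#/prefix}" prepends the local config directory to each name
    scp "${files[@]/#/${conf_dir}/}" "${host}:/home/ubuntu/hadoop/etc/hadoop/" || return 1
  done
}
```

Usage: push_hadoop_conf "$HADOOP_CONF" ubuntu@SECONDARY_NAME_NODE_ADDRESS ubuntu@SLAVE1_ADDRESS ubuntu@SLAVE2_ADDRESS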

11. Configure Slaves on Master machine

We only do this on the master machine. In hadoop-2.6.0 the ‘slaves’ file is read only by the start/stop scripts (such as start-dfs.sh) on the machine where they run, so the slave machines don’t need it.

ubuntu@ip-xxx-xxx-xxx-xxx:~$ nano $HADOOP_CONF/slaves

SLAVE1_ADDRESS

SLAVE2_ADDRESS
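
start-dfs.sh and start-yarn.sh reach every host over SSH, including the master's own host name and 0.0.0.0. On the first connection to each host, SSH stops with an "Are you sure you want to continue connecting (yes/no)?" prompt, as the log in step 12 shows. A hedged way to avoid this is to record the host keys beforehand with ssh-keyscan. This accepts whatever key each host presents, so it assumes a network you trust. The function name trust_hosts is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the SSH host keys of the given hosts to a
# known_hosts file so later connections do not prompt for confirmation.
# It trusts whatever key each host presents, so use it only on a trusted network.
trust_hosts() {
  local known="$1" host
  shift
  for host in "$@"; do
    # -H stores hashed host names instead of plain text
    ssh-keyscan -H "$host" >> "$known" 2>/dev/null || return 1
  done
}
```

Usage: trust_hosts ~/.ssh/known_hosts SLAVE1_ADDRESS SLAVE2_ADDRESS MASTER_SERVER_ADDRESS 0.0.0.0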

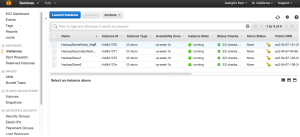

12. Hadoop Daemon Startup

Run the following on the master machine.

ubuntu@ip-172-31-22-234:~$ hdfs namenode -format

...

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at ip-172-31-22-234.us-west-1.compute.internal/172.31.22.234

************************************************************/

ubuntu@ip-172-31-22-234:~$ start-dfs.sh

Starting namenodes on [ip-172-31-22-234.us-west-1.compute.internal]

The authenticity of host 'ip-172-31-22-234.us-west-1.compute.internal (172.31.22.234)' can't be established.

ECDSA key fingerprint is a3:bf:43:66:11:52:40:cc:6c:00:ea:81:73:11:b2:a8.

Are you sure you want to continue connecting (yes/no)? yes

ip-172-31-22-234.us-west-1.compute.internal: Warning: Permanently added 'ip-172-31-22-234.us-west-1.compute.internal' (ECDSA) to the list of known hosts.

ip-172-31-22-234.us-west-1.compute.internal: starting namenode, logging to /home/ubuntu/hadoop-2.6.0/logs/hadoop-ubuntu-namenode-ip-172-31-22-234.out

ip-172-31-0-61.us-west-1.compute.internal: starting datanode, logging to /home/ubuntu/hadoop-2.6.0/logs/hadoop-ubuntu-datanode-ip-172-31-0-61.out

ip-172-31-0-60.us-west-1.compute.internal: starting datanode, logging to /home/ubuntu/hadoop-2.6.0/logs/hadoop-ubuntu-datanode-ip-172-31-0-60.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is a3:bf:43:66:11:52:40:cc:6c:00:ea:81:73:11:b2:a8.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /home/ubuntu/hadoop-2.6.0/logs/hadoop-ubuntu-secondarynamenode-ip-172-31-22-234.out
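
In this output the secondary NameNode was started on 0.0.0.0, that is, on the master itself, not on the separate secondary NameNode machine. start-dfs.sh asks ‘hdfs getconf -secondaryNameNodes’ where to start it. When dfs.namenode.secondary.http-address is not set in hdfs-site.xml, that falls back to its default of 0.0.0.0:50090. Setting the property to SECONDARY_NAME_NODE_ADDRESS:50090 should move it to the intended machine. The check below only reports which case applies. The function name check_secondary_nn is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: warn when the secondary NameNode would be started on this
# machine because dfs.namenode.secondary.http-address is still the default.
check_secondary_nn() {
  local hosts
  hosts="$(hdfs getconf -secondaryNameNodes 2>/dev/null)" || return 1
  case "$hosts" in
    *0.0.0.0*)
      echo "secondary NameNode will start on this machine (0.0.0.0)" >&2
      return 1 ;;
  esac
  echo "secondary NameNode host(s): $hosts"
}
```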

ubuntu@ip-172-31-22-234:~$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/ubuntu/hadoop/logs/yarn-ubuntu-resourcemanager-ip-172-31-22-234.out

ip-172-31-0-60.us-west-1.compute.internal: starting nodemanager, logging to /home/ubuntu/hadoop-2.6.0/logs/yarn-ubuntu-nodemanager-ip-172-31-0-60.out

ip-172-31-0-61.us-west-1.compute.internal: starting nodemanager, logging to /home/ubuntu/hadoop-2.6.0/logs/yarn-ubuntu-nodemanager-ip-172-31-0-61.out

ubuntu@ip-172-31-22-234:~$ jps

2253 NameNode

2865 Jps

2466 SecondaryNameNode

2600 ResourceManager

ubuntu@ip-172-31-22-234:~$

Cool~ Looks like it’s working!
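
jps on the master lists no DataNode or NodeManager, because those daemons run on the slaves. From the master, ‘hdfs dfsadmin -report’ and ‘yarn node -list’ show which nodes have registered. The check below instead asks each slave directly, assuming jps is on the PATH of non-interactive SSH sessions there. The function name check_slaves is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check, run on the master: confirm that DataNode and NodeManager
# are running on each slave by running jps there over SSH.
check_slaves() {
  local host out daemon status=0
  for host in "$@"; do
    out="$(ssh "$host" jps 2>/dev/null)"
    for daemon in DataNode NodeManager; do
      if ! grep -qw "$daemon" <<<"$out"; then
        echo "$host: $daemon not running" >&2
        status=1
      fi
    done
  done
  return "$status"
}
```

Usage: check_slaves SLAVE1_ADDRESS SLAVE2_ADDRESS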

References:

http://blog.c2b2.co.uk/2014/05/hadoop-v2-overview-and-cluster-setup-on.html

http://stackoverflow.com/questions/4681090/how-do-i-find-where-jdk-is-installed-on-my-windows-machine